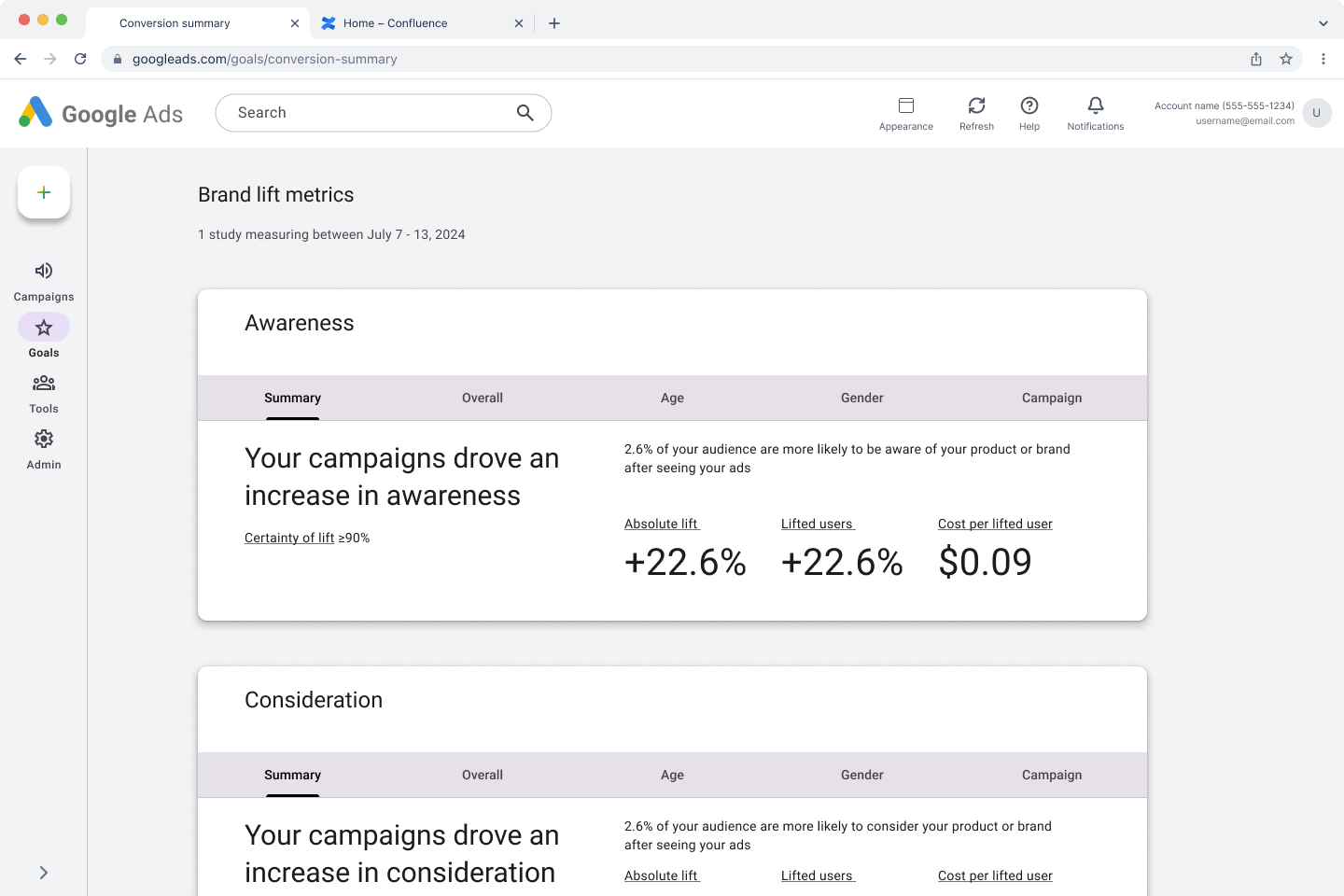

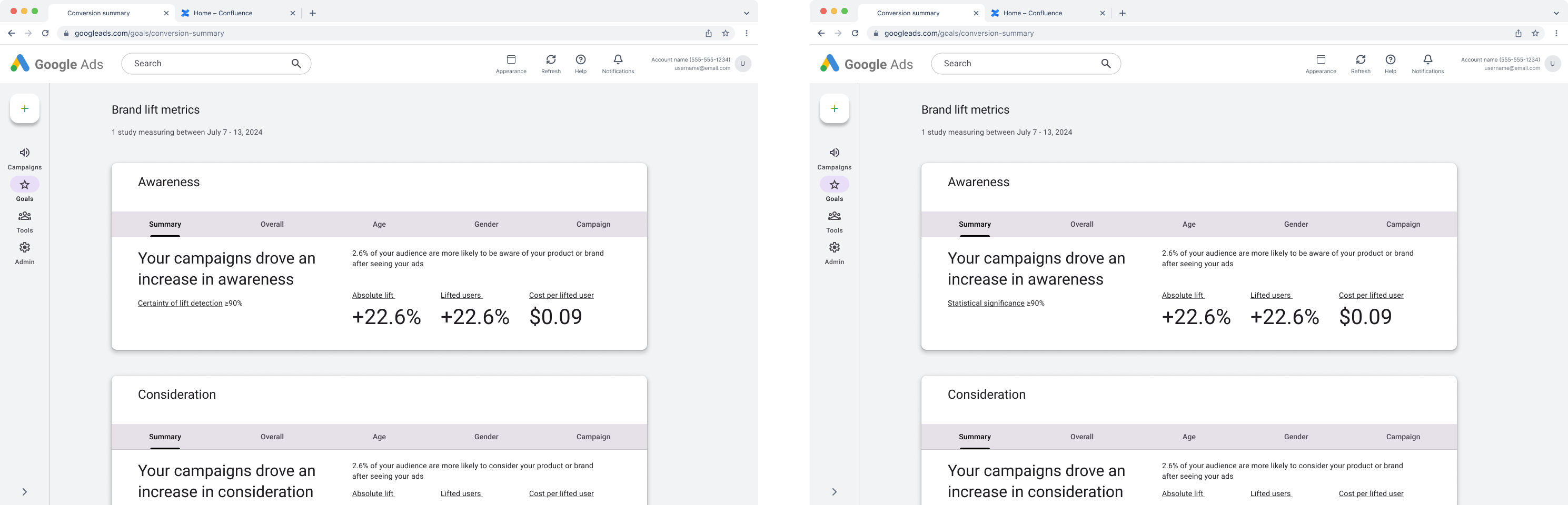

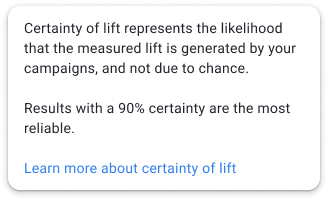

The launched update to brand lift results includes a new metric called "Certainty of Lift," which indicates the likelihood that an advertiser's campaign generated lift.

Brand lift campaigns on Google Ads rarely achieved statistical significance, leaving advertisers with vague insights like "Your campaign drove an increase in awareness." We proposed lowering the statistical threshold and displaying confidence levels directly in the product—giving advertisers transparency about result reliability while making more campaigns actionable.

I led the content design and messaging to make this complex statistical concept accessible to both novices and experts. The updates significantly improved customer satisfaction scores and renewed engagement with brand lift campaigns.

Team

UX Designers, Product Managers, Software Engineers, and Program Managers

Company

Google, Google Ads

Our content design principles

Make statistics human by translating terminology into clear, trustworthy language

Put information where it belongs

Validate language choices through research rather than following others

Serving two important but distinct users

Our research identified two distinct user groups within the brand marketer audience, each with different needs and capabilities. Understanding both user types was essential for creating inclusive content.

Stats expert

- Works as a brand marketer or data scientist.

- Took advanced math or statistics courses.

- Interprets data and results with confidence.

- Serves as the team's go-to resource for understanding statistical implications.

Stats novice

- Works as a brand marketer.

- Has limited recent statistics experience.

- Relies on data science colleagues for interpretation.

- Benefits from simplified explanations and educational resources.

Conducting a literature review

I began by examining existing research and documentation to understand previous approaches and establish context. The literature review encompassed past research studies, brand guidelines, naming conventions, educational materials, and empirical studies. It revealed valuable patterns but no definitive naming solutions.

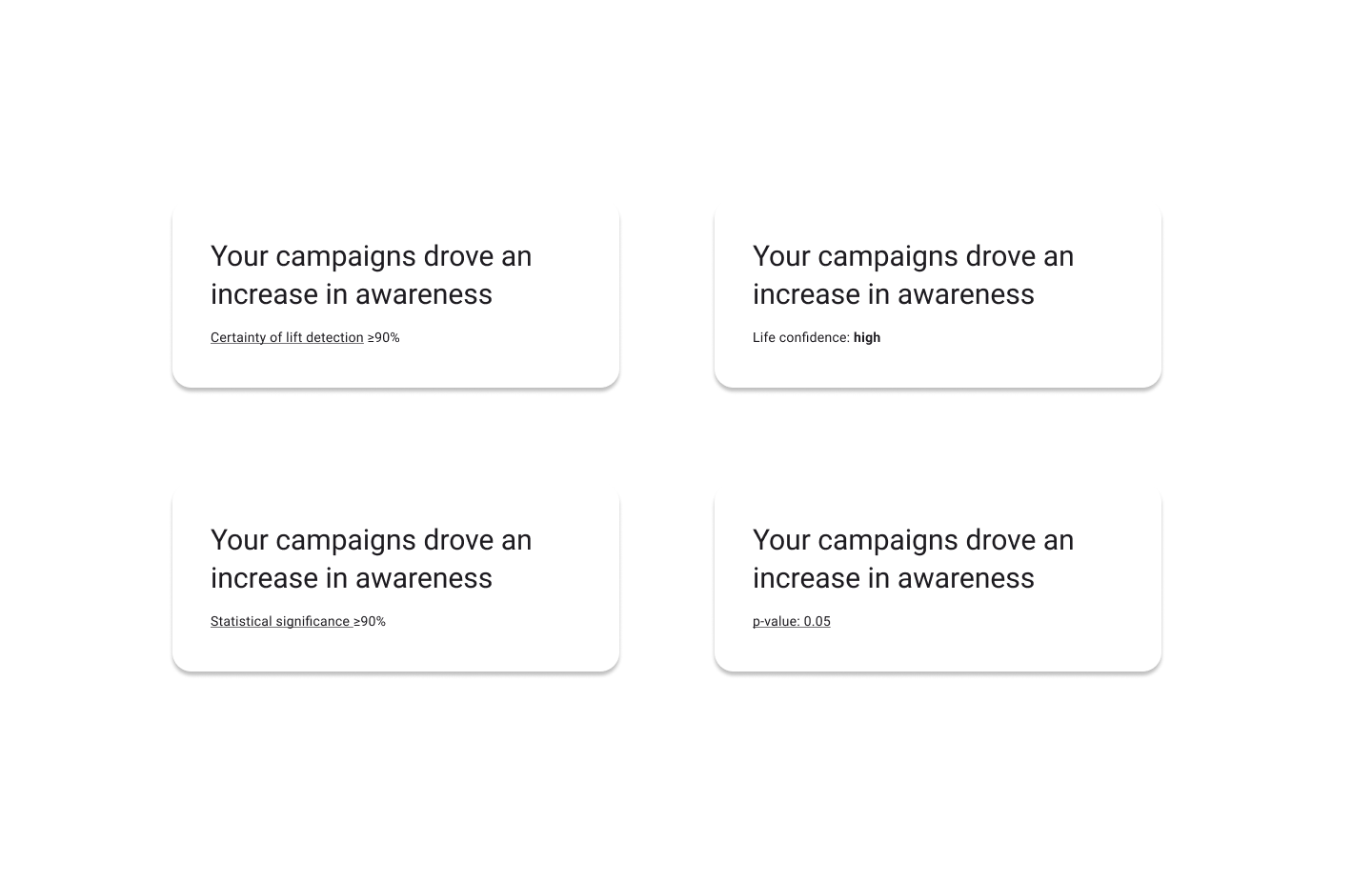

Exploring the different options for a name

The team had explored several naming options before my involvement. After conducting the literature review and consulting design guidelines, I proposed "certainty of lift," though stakeholders initially expressed skepticism about this terminology.

Our potential names emerged from cross-functional collaboration. Content, engineering, product management, design, and data science contributed to the list.

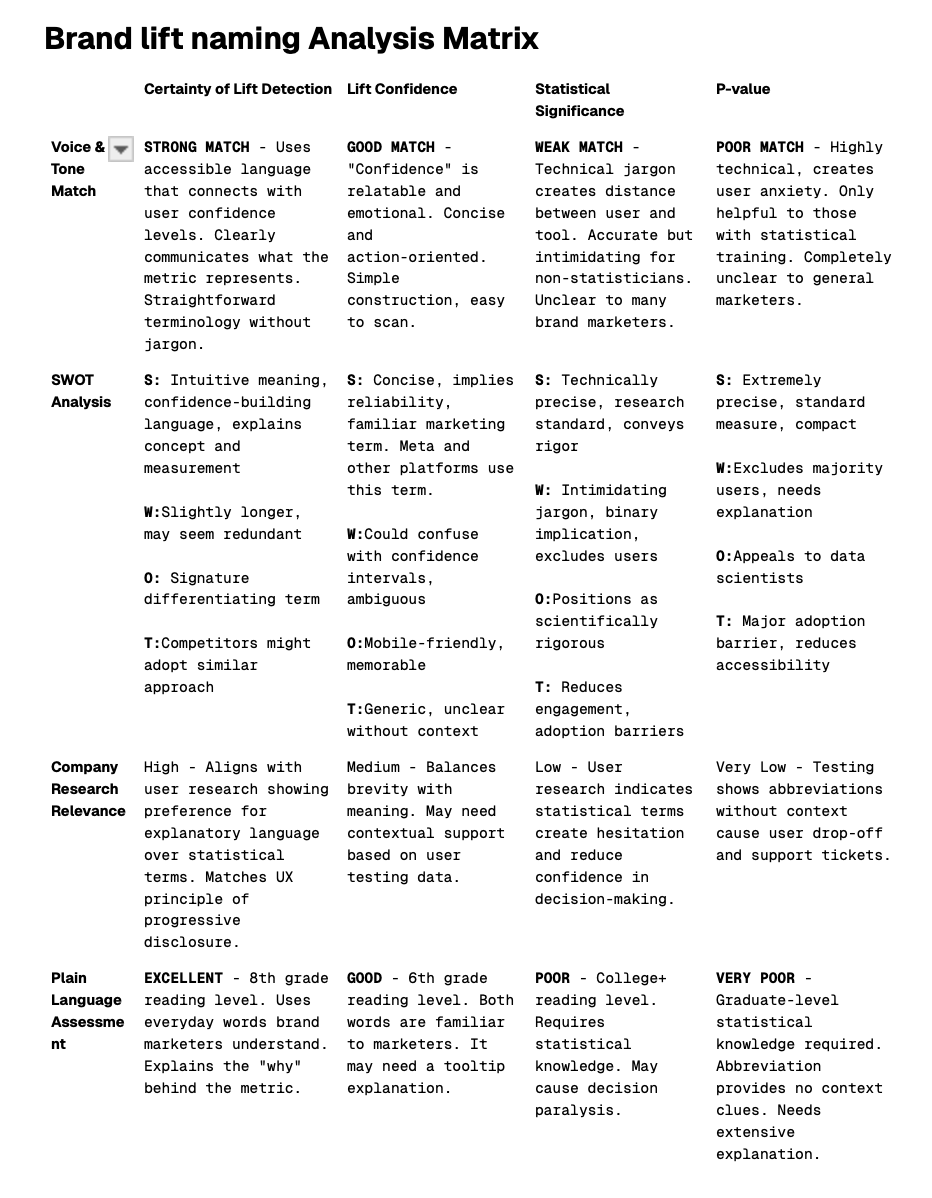

To move beyond subjective preferences, I developed a structured evaluation framework. Each option was assessed against established guidelines, user needs, and plain language principles, ensuring our decision would be grounded in objective criteria rather than personal opinions.

Systematic analysis helped evaluate options against multiple criteria: Google’s voice and tone and naming guidelines, a SWOT analysis, and a plain language assessment.

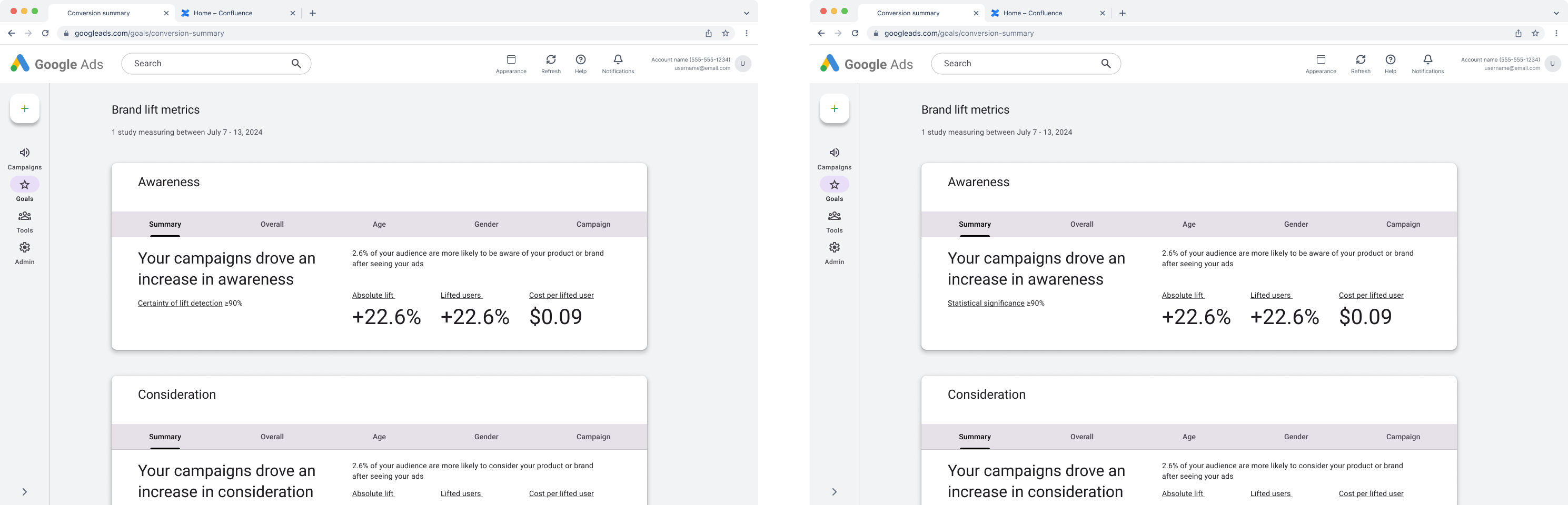

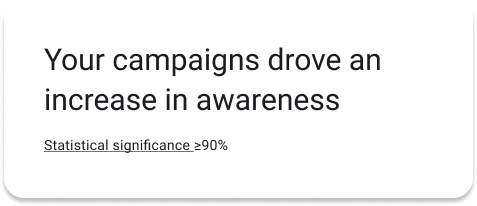

The analysis narrowed our options to two candidates: "statistical significance" (favored by data science stakeholders) and "certainty of lift detection" (aligned with content standards). User research would determine the final choice.

Certainty of lift detection and statistical significance ended up being two working options.

Testing through research

Effective feature names should be self-explanatory. While tooltips and help documentation provide support, the primary terminology should communicate clearly without additional explanation.

I partnered with UX researchers to design a study comparing both terminology options. Participants viewed identical interfaces with different terminology, then explained their understanding of the metrics and their implications for campaign decisions.

The drafted research study ensured the in-product content would be thoroughly validated and that both user groups understood the metric and metric group without needing to reference tooltips or additional educational information.

The participants were divided into two groups: one saw "statistical significance" and the other saw "certainty of lift detection." Both groups were asked to explain what the term meant to them.

Results strongly favored "certainty of lift detection" across all user segments. Notably, even statistically sophisticated users found "statistical significance" ambiguous in this context. We further simplified it to "certainty of lift" based on these findings.

Statistical significance

- More stats-savvy users preferred this because they use this language today

- Less stats-savvy users preferred this because it seemed more technical and exact

- More universal term, specific to stats

- Most users could not explain how it's calculated

Certainty of lift detection

- Term more specific to lift

- Users deduced the meaning by using context clues

- Users read "lift detection" as meaning they got lift, and "certainty" as how accurate the results are

- Both more and less stats-savvy users preferred this because it was easier to understand and explain to others

We launched this experience to a cohort of 50 advertisers. Before a wider market release, the team wanted to validate the experience’s usability and confirm that users correctly comprehended the new metric, so our research partners conducted a quantitative study to measure key user perceptions. The survey included questions designed specifically to confirm that this initial cohort interpreted the new metric as we intended. This provided the data-backed signal we needed to de-risk the launch.

Out of 132 respondents, 15 selected an incorrect answer. While this indicated an opportunity to improve our educational content, the survey confirmed that the metric naming was effective.

Creating an easy-to-read page

With validated terminology, I focused on designing the complete user experience. The goal was to make statistical results both comprehensible and actionable for decision-making.

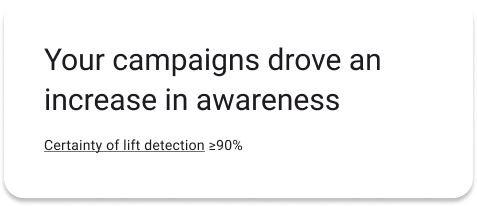

I explored approaches that combined metric definitions with contextual guidance based on confidence levels, moving beyond simple percentage displays to provide meaningful interpretation.

A few iterations of the headline and subhead copy for the Star tag.

Research revealed two primary use cases: some advertisers required statistical validation for major decisions, while others sought directional insights for ongoing optimization. Our content strategy needed to accommodate both scenarios effectively.

To align stakeholders on tooltip language, I developed two versions—one using plain language and another with technical terminology. Demonstrating that the accessible version maintained accuracy while improving comprehension secured stakeholder approval.

Balanced clarity with accuracy to ensure both technical and non-technical users could understand and trust the results.

The project delivered measurable improvements: over 50% of users found brand lift campaigns easy to use, and 90% understood "Certainty of lift."

Key learnings: Complex concepts don't require complex language—simple terminology that resonates with users can maintain technical accuracy while bridging knowledge gaps. Showing results below traditional significance thresholds proved valuable, with 70% of users preferring directional insights over no information. Most importantly, user research revealed that terminology seeming "oversimplified" to internal stakeholders was optimal for actual users—validating evidence-based content decisions over intuition.